How to Use AI to Improve WordPress SEO

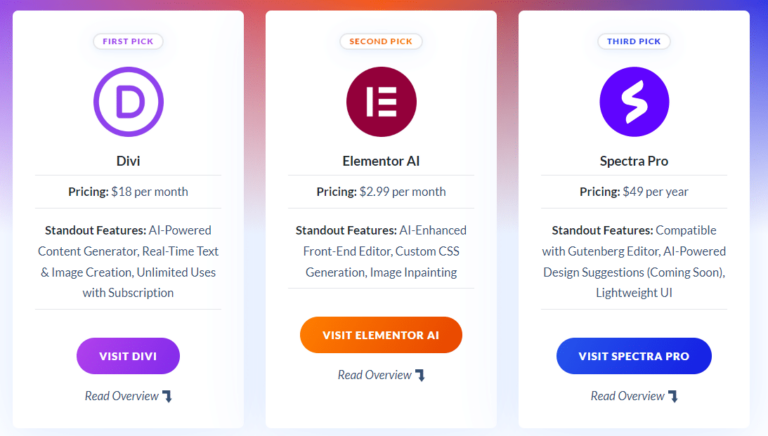

Want to take your WordPress SEO to the next level? AI technologies can help. AI-powered SEO tools like Semrush, Divi AI, and Rank Math can help you create high-quality content that search engines love. In this article, we’ll walk you through the steps you need